Our Knowledge

We are dedicated to sharing what we’ve learned with our community through the Tech Radar, meetups, our blog, and our podcast.

What is the Tech Radar?

An opinionated map of the latest technologies and trends in the Israeli tech industry. The 9th edition of the Israeli Tech Radar was built in collaboration with leading tech companies such as Bluevine, CyberArk, Guesty, Redis, and more.

2025-26 Tech Trends

Agentic SDLC: AI reshaping software development

AI is reshaping the entire SDLC, from fast, prompt-driven “vibe coding” to structured AI-assisted development and broader organizational adoption.

Agentic architectures powering autonomous AI systems

Beyond developer tools, there’s a clear shift toward building more advanced autonomous AI systems and standardizing how they interact.

Modern data architectures for AI and real-time analytics

The demands of AI and real-time analytics are driving major changes in how data is stored, processed, and governed.

FinOps and AIOps: Optimizing cloud and LLM spend

As AI workloads grow more compute-intensive and token-based pricing models for LLMs become the norm, managing cloud costs has become a critical concern.

Fullstack complexity and the shifting JavaScript ecosystem

The fullstack development landscape is increasingly shaped by frustration with rising complexity.

Read more...

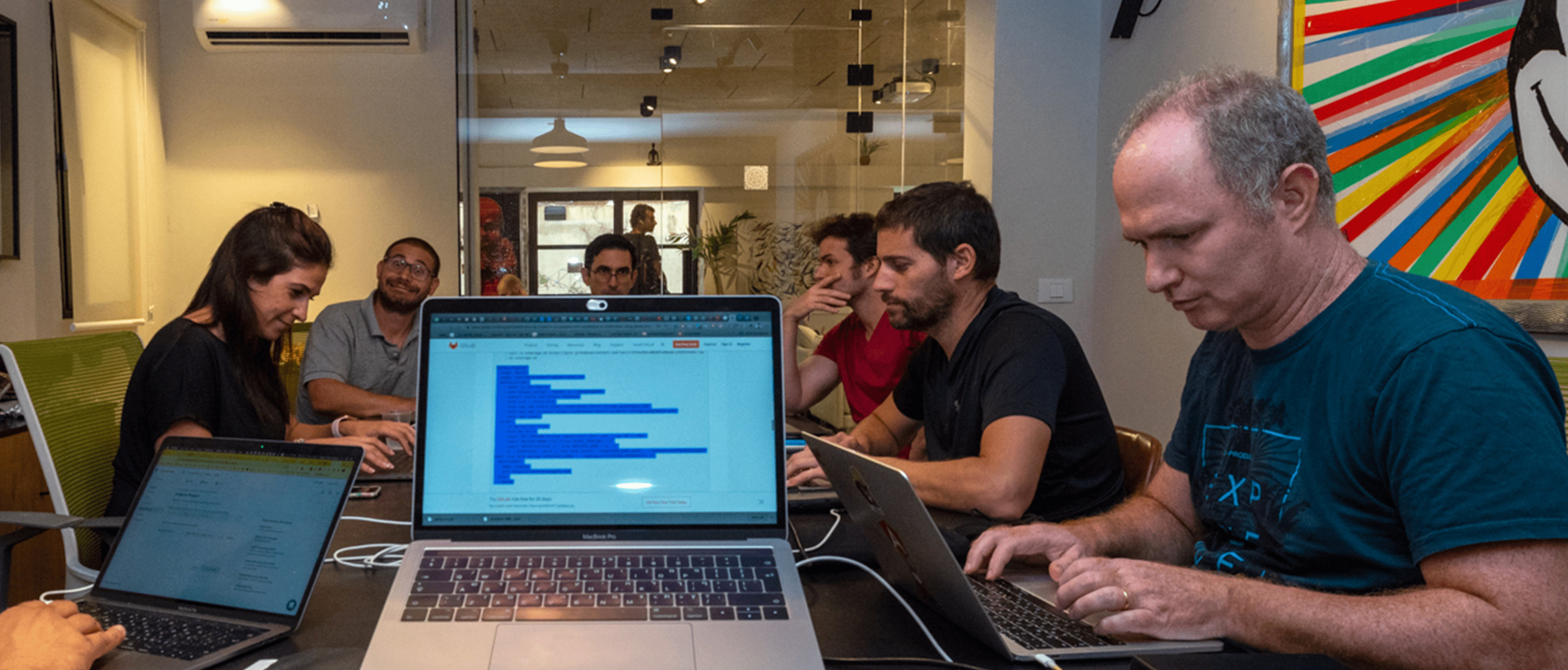

Tikal News

Tech Radar News: May Edition

Individual Contributor panel

Read Our Blog

From AI Assistants to AI Agents: A DevOps Engineer’s Guide to AI’s Evolution

SQLFluff: Linting my way through dbt and finding peace (Kinda)

What We Talk About When We Talk About Next.js Best Practices?

Listen to Our Podcast

17/03/2025

Configuration Management in Cloud Native Environments

Why is configuration management still a challenge in cloud-native environments? Haggai Philip Zagury and Peleg Porat discuss Kubernetes, GitOps, automation, and how to avoid costly misconfigurations.

25/05/2025

Observability in Cloud Native Environments

Haggai Philip Zagury hosts Aviva Peisach (Wix) and Semyon Teplitsky (Via) to discuss how teams approach observability, prevent failures, and give developers real visibility into production.

21/04/2025

Service Mesh in Cloud Native Environments

Microservices at scale need more than deployment: they need secure, reliable service-to-service communication. Haggai Philip Zagury and Uri Brodsky discuss how Istio and Envoy help teams manage traffic, security, and observability with a service mesh.

Listen to the Podcast